In a previous blog post, we discussed how to use a PRISM waveform monitor for color grading in a wide gamut environment. Here, we go back to the basics of human light perception and the impact it has on high dynamic range content creation.

Light behaves as both a particle and a wave. As an electromagnetic wave, it carries energy, varies in wavelength, and does not need a medium to travel through, so it can cross a vacuum. Sound, by contrast, does need a medium. In its particle form, the particles of light are called “photons.”

Why is that important? If you’re reading this blog post because it has “HDR” in the title, you’ve probably heard of “high dynamic range and wide color gamut.” The theory of light matters because we can measure light intensity by measuring photons, and that intensity is the basis of luminance. The more photons, the higher the luminance. The range from few to many photons, or dark to light, is called dynamic range. High dynamic range (HDR) refers to the ability of an image to have brighter brights and darker darks when compared to standard dynamic range (SDR) images and video.
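To make the idea of a range from dark to light concrete, here is a minimal sketch that expresses dynamic range in stops, where each stop is a doubling of luminance. The function name `dynamic_range_stops` and the SDR and HDR luminance figures (in nits, or cd/m²) are illustrative assumptions, not measurements of any particular display.

```python
import math


def dynamic_range_stops(darkest_nits: float, brightest_nits: float) -> float:
    """Return the dynamic range in stops (doublings of luminance)."""
    if darkest_nits <= 0 or brightest_nits <= darkest_nits:
        raise ValueError("expected 0 < darkest_nits < brightest_nits")
    return math.log2(brightest_nits / darkest_nits)


# Assumed, illustrative black and peak-white levels only.
sdr_stops = dynamic_range_stops(0.1, 100.0)
hdr_stops = dynamic_range_stops(0.005, 1000.0)

print(f"SDR example: {sdr_stops:.1f} stops")
print(f"HDR example: {hdr_stops:.1f} stops")
```

Under these assumed figures, the HDR example spans more stops than the SDR one because both ends of the range move: the darks get darker and the brights get brighter.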

The other reason the dual nature of light is important is that light can be separated by wavelength using filters and prisms, and wavelength is what we perceive as color. A color gamut describes the range of colors, within a color space, that can be recorded or reproduced on a particular device. Note that the human eye can perceive a larger range of colors than is supported by cameras and displays, so various color gamuts, ranging from narrow to wide, have been defined for devices. These defined color spaces ensure that colors are recorded and displayed consistently across the imaging ecosystem. Otherwise, different devices would come up with different versions of colors.

In summary, luminance is the defining characteristic of dynamic range, and chrominance is the basis for color gamut. Remember, too, that visible light is just a very small fraction of the electromagnetic spectrum. Invisible radiation includes radio waves (including the broadcast bands and radar), microwaves, infrared, ultraviolet, x-rays, and gamma rays.

Human Light Perception

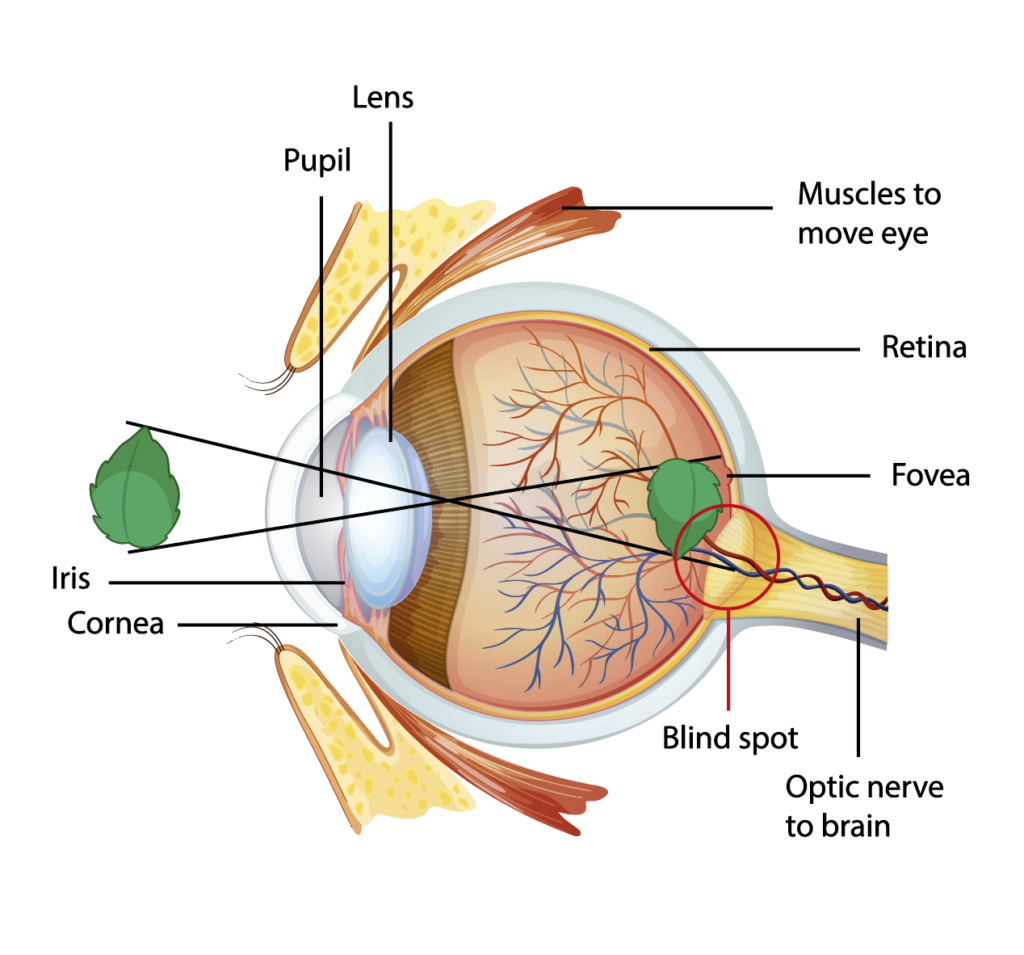

Let’s now dive into how our eyes perceive light. Light enters our eyes through the cornea and ends up on the macula, a region of the retina at the back of the eye. Within the macula is the fovea, which contains a very high concentration of light receptors. The fovea allows us to recognize fine details, such as those required for reading. The receptors convert the energy of the incoming light into electrical signals, which is how we’re able to see those details.

Human Eye Structure

Furthermore, there are two types of receptors in our eyes. Rods respond to light intensity but do not distinguish color, while cones come in three types that are each most sensitive to a different band of wavelengths: short, medium, and long. These correspond roughly to blue, green, and red, respectively. Our brains use the combination of those stimuli to interpolate the other colors in the visible spectrum.

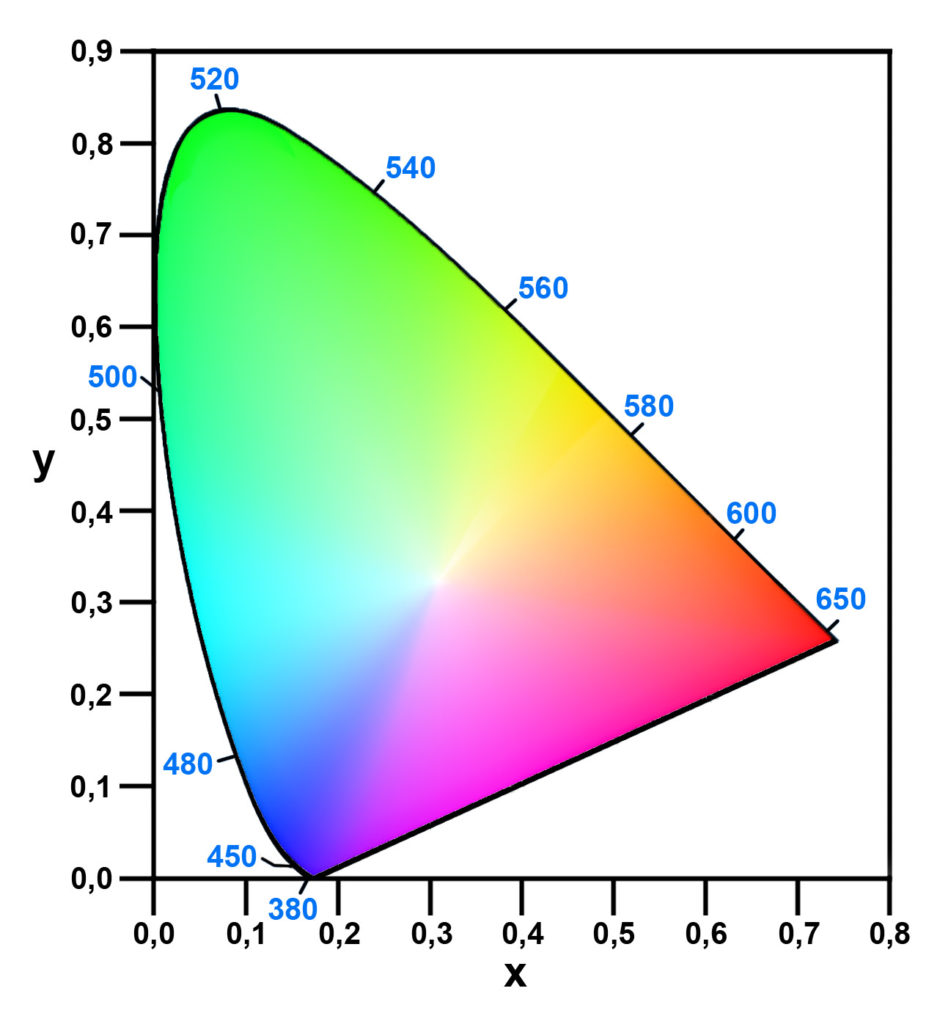

In 1931, the CIE xy model was created to describe color in a two-dimensional chromaticity diagram, with luminance handled as a separate quantity. These depictions should be very familiar to those who are already working on high dynamic range workflows.

CIE 1931 Color Gamut

The x and y axes are chromaticity coordinates derived from the X, Y, and Z tristimulus values, and luminance is carried separately by Y, thus separating light into chromaticity and luminance. The combination of chromaticity and luminance is color volume. An important item to note is that at higher intensity, less color is perceived.

Our perception of light intensity is not linear. We are more sensitive to changes in luminance in the lower part (darks and shadows) of the measured luminance range. The brighter the light gets, the more linear our perception becomes, which affects the way images are produced and post-produced. While film responds to light in a way similar to our eyes, digital technology does not respond to light intensity in the same way our eyes perceive it.
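The separation of chromaticity from luminance described above can be sketched in a few lines. This is a minimal example of the standard CIE conversion from XYZ tristimulus values to xyY. The function name `xyz_to_xyY` is our own, and the sample input is the commonly quoted D65 white point normalized to Y = 1, used here as an assumption for illustration.

```python
from typing import Tuple


def xyz_to_xyY(X: float, Y: float, Z: float) -> Tuple[float, float, float]:
    """Split XYZ tristimulus values into chromaticity (x, y) and luminance Y."""
    total = X + Y + Z
    if total == 0:
        raise ValueError("X + Y + Z must be non-zero")
    # x and y depend only on the proportions of X, Y, and Z,
    # so they describe color independently of how bright the light is.
    return X / total, Y / total, Y


# Assumed sample input: D65 white point, normalized so that Y = 1.
x, y, luminance = xyz_to_xyY(0.95047, 1.0, 1.08883)
print(f"x = {x:.4f}, y = {y:.4f}, Y = {luminance:.1f}")

# Doubling the light leaves x and y unchanged and only scales Y.
x2, y2, luminance2 = xyz_to_xyY(2 * 0.95047, 2 * 1.0, 2 * 1.08883)
```

Because x and y are ratios, scaling all three tristimulus values by the same factor moves only Y, which is why the two-dimensional diagram can show chromaticity without luminance.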

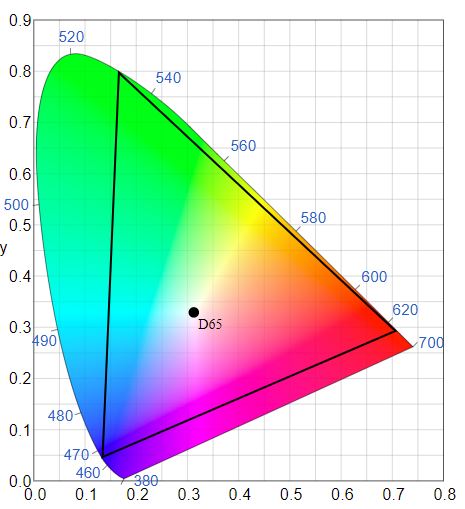

Therefore, several color spaces have been defined, including DCI P3 and Rec 709, that cover part of the CIE 1931 chromaticity diagram. The wider color gamut defined in Rec 2020 covers more of the diagram and comes closer to the range of colors the human eye can perceive.

Rec 2020 Color Gamut
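As a rough way to compare how much of the chromaticity diagram each gamut covers, the sketch below computes the area of the triangle formed by each color space's red, green, and blue primaries in xy coordinates. The primary values are assumed here from the respective specifications, and the helper `triangle_area` is our own. Area in the xy diagram is not perceptually uniform, so treat the ratios as an indication only.

```python
# Assumed xy chromaticity coordinates of the R, G, B primaries.
PRIMARIES = {
    "Rec 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of a triangle from three (x, y) vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


areas = {name: triangle_area(points) for name, points in PRIMARIES.items()}

for name, area in areas.items():
    ratio = area / areas["Rec 709"]
    print(f"{name}: area {area:.4f} ({ratio:.2f}x Rec 709)")
```

With these values, the triangles grow in the order Rec 709, DCI P3, Rec 2020, which matches the narrow-to-wide ordering described in the text.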

Conclusion

Understanding human light perception is important because digital cameras do not capture light the way we perceive it. Digital cameras use color filters (red, green, and blue) to prevent light of other wavelengths from hitting each part of the sensor array. The sensor transforms the light into an electrical signal, which is then converted into a set of digital values. The human eye, by contrast, does not digitize the information.

Latitude for digital cameras, measured in f-stops, refers to the range between the darkest and brightest values the camera can capture, and bit depth refers to the number of discrete values available to represent the captured image. Both of these must be considered when choosing a camera and lens combination for varying lighting conditions. More latitude without enough bit depth means losing information because there are fewer levels to represent the image. More bit depth without enough latitude means captured images are more subject to noise in the dark areas and clipping in the lighter ones.
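The trade-off between latitude and bit depth can be illustrated with a small calculation. The sketch below assumes a purely linear encoding, in which the brightest stop takes half of all code values and each stop below it takes half of what remains. Real cameras typically use log or other non-linear curves to spread values more evenly, so this is a simplified model, and the function name `linear_levels_per_stop` is hypothetical.

```python
def linear_levels_per_stop(bit_depth: int, stops: int) -> list:
    """Code values available in each stop, brightest first, for linear encoding."""
    total_codes = 2 ** bit_depth
    # Stop k (0 = brightest) spans luminance from 1/2**(k+1) to 1/2**k of
    # full scale, so it receives total_codes / 2**(k+1) code values.
    return [total_codes / 2 ** (k + 1) for k in range(stops)]


for bits in (10, 14):
    levels = linear_levels_per_stop(bits, stops=14)
    starved = sum(1 for n in levels if n < 1)
    print(f"{bits}-bit, 14 stops: brightest stop has {levels[0]:.0f} levels, "
          f"{starved} stop(s) have less than one level")
```

In this simplified model, 14 stops of latitude recorded at 10 bits leaves the darkest stops with less than one code value each, which is the loss of information described above. At 14 bits, every stop keeps at least one level.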

In summary, for digital cameras, especially those used for high dynamic range, underexposure can create visible digital noise, while overexposure can cause clipping, which is an unrecoverable loss of information.

ref: https://kborigin.telestream.net/2022/09/high-dynamic-range-hdr-and-why-light-properties-matter/