The history of High Dynamic Range (HDR)

As I’ve mentioned previously, one thing we know to be constant in our industry is that there is always going to be change. We’ve moved from black and white to color, to digital, to HD, in the constant desire to bring higher and higher fidelity images to the home. This has never been more true than today. Talk of ever-higher resolutions and frame rates continues to stretch the boundaries of delivery bandwidths, with, some might say, diminishing returns.

It’s hard to argue against the value of High Dynamic Range TV (HDR), though. Even the least discriminating viewer notices the improvement the moment they see their first HDR images. Large screen or small, high frame rate or standard, the visible improvement HDR brings is immediately apparent. But the details still appear somewhat veiled. Our industry is highly experienced in adopting new technologies, and it often simply expects those producing, lighting, shooting and distributing the resulting media to adopt them and excel in their various duties, without taking the time to really explain the basic concepts.

This series of blog posts will attempt to help you get a solid grip on this technology. I’d like this to be interactive – if something in the blog doesn’t make sense, please ask me to clarify – I’ll be happy to do so.

What is HDR?

So let’s start by explaining the term. High Dynamic Range: what is a dynamic range, and why do we need a higher one?

Dynamic range is a term engineers and physicists use to define the full range of values that a piece of technology can deal with. In film, for example, it is the range from the smallest amount of light a piece of film can capture (go any darker, and you can’t detect the difference) to the largest amount of light the film can tolerate before it saturates (go any brighter, and you can’t detect the difference, because the film has absorbed as much light as it can). In audio, it is the range from silence (defining silence would be another complete set of blogs, so believe me, silence can be variable!) to the loudest level that a piece of equipment can record, tolerate or present (play back).

It’s important to note that different pieces of equipment at different stages in the media production/delivery process could, in theory, have different dynamic ranges. In that case, the dynamic range of the system as a whole may be limited by the component with the lowest dynamic range. More on that later. Just remember: when you talk about dynamic range or High Dynamic Range, it’s important to specify whether you are talking about a system or an individual component of that system.
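The following minimal Python sketch (not from the original post) makes the two ideas above concrete. It expresses dynamic range in photographic stops, where each stop is a doubling of light. It then models a system as a simple chain whose overall range is capped by its weakest stage. The function names, component names and values are hypothetical. The values are powers of two so the results come out as whole stops, and real pipelines are more nuanced than a plain minimum.

```python
import math


def dynamic_range_stops(darkest: float, brightest: float) -> float:
    """Dynamic range in stops: how many doublings separate darkest from brightest."""
    if darkest <= 0 or brightest <= darkest:
        raise ValueError("need 0 < darkest < brightest")
    return math.log2(brightest / darkest)


def system_dynamic_range(components: dict[str, tuple[float, float]]) -> tuple[str, float]:
    """Simplified model: the chain can carry no more range than its weakest stage.

    Returns the name of the limiting component and its range in stops.
    """
    ranges = {name: dynamic_range_stops(lo, hi) for name, (lo, hi) in components.items()}
    limiting = min(ranges, key=ranges.get)
    return limiting, ranges[limiting]


# Hypothetical chain, in arbitrary relative light units.
chain = {
    "camera": (1, 2**14),    # 14 stops
    "encoding": (1, 2**12),  # 12 stops
    "display": (1, 2**10),   # 10 stops
}
print(system_dynamic_range(chain))  # ('display', 10.0)
```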

If our aim is for a system to reproduce, as accurately as possible, the experience an observer would have if they were actually observing the original event, then we would like that system to have a dynamic range equal to, or preferably slightly greater than, the dynamic range the observer would encounter were they actually at the event.

Limitations to HDR

However, reality (and financial requirements) often mean that we have to limit the amount of information we can pass through a system. In TV, the ideal upper limit for the dynamic range of the video would be the dynamic range of the human visual system. To date, we’ve fallen far short of that; I’ll explain why in future blogs.

One thing to remember, though: when people talk about HDR, they’re almost always referring to both High Dynamic Range and the ability to reproduce more colors. That second aspect of video engineering is more correctly called Wide Color Gamut (WCG), and in practice you really can’t do one without the other.

In the next thrilling installment, we’ll discuss the dynamic range of the natural world around us, how that interacts with the human visual system, and the dynamic range of various components in the video path – where they were, where they are now, and where they may be in a couple of years. See you then.

Questions

Q1: What are the differences between Standard Dynamic Range (SDR) and HDR?

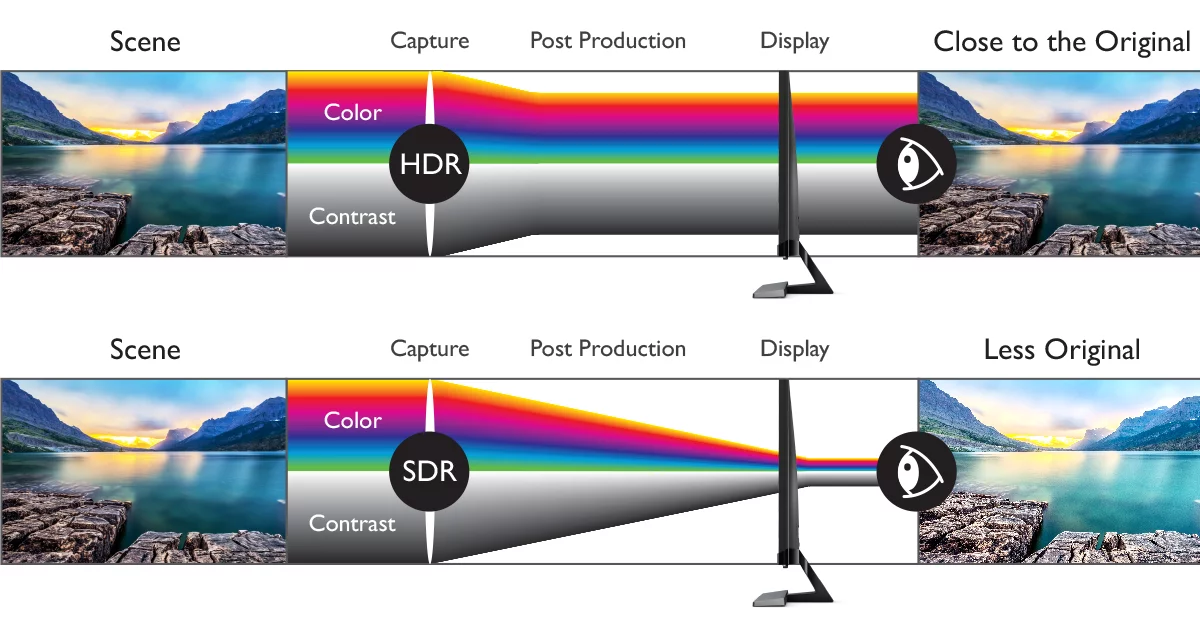

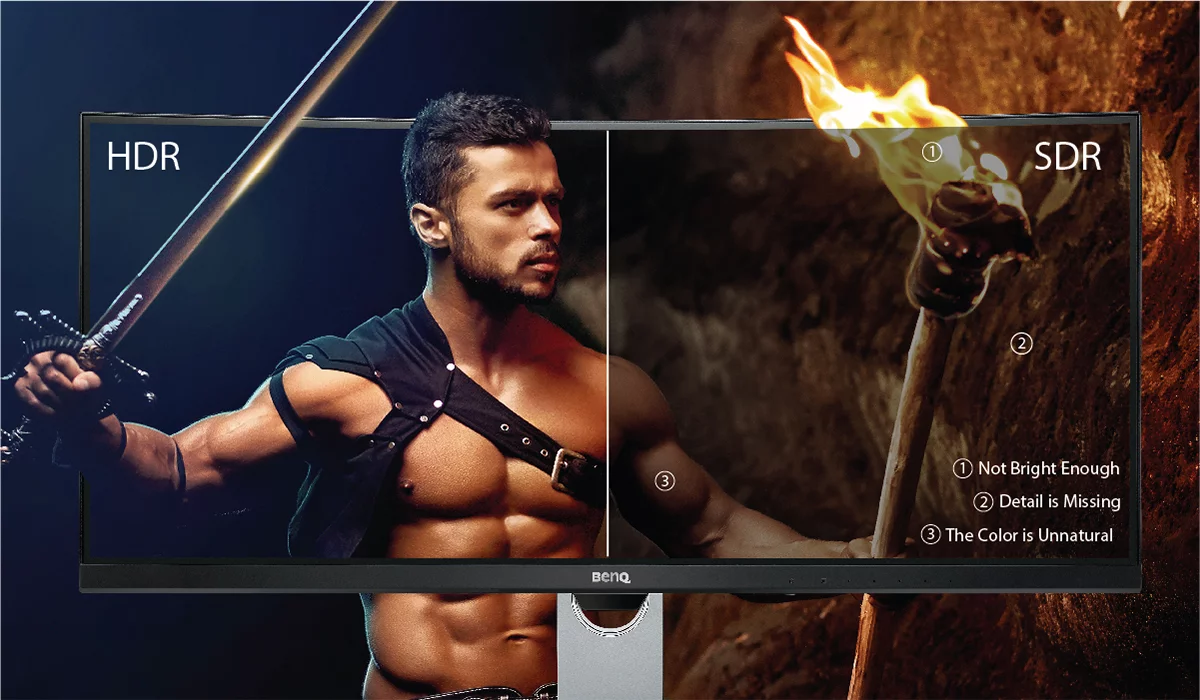

HDR monitors aim to reproduce images that look as close as possible to the original scene. Thanks to its wider dynamic range, an HDR display can preserve the full variation from bright to dark tones within a scene. This avoids overexposed highlights while keeping detail in dark areas. HDR monitors also aim to render colors more accurately than conventional SDR displays. To cater to consumer preferences, SDR monitors often display images with an artificially strong hue. HDR technology, by contrast, is designed to recreate the original colors faithfully, even in dark areas, as the naked eye would see them in real life. To achieve the intended picture quality, HDR content must be mastered by professional engineers. They tune the tone and color gamut of the filmed material so it is appropriate for playback on an HDR display.

How Color Relates To HDR

A monitor’s color gamut is the total range of colors it can produce by mixing its red, green, and blue primaries. Any color that lies inside the triangle formed by these three primaries can be produced by blending them. The display cannot create a color outside that triangle, that is, outside its color gamut. Conventional SDR displays are typically limited to the Rec.709 color gamut, the international standard for HDTV formats. Today’s HDR displays enlarge that triangle to cover more of the colors the human eye can detect, using specially designed LCD panels that accommodate the larger DCI-P3 color gamut. Widely used in the Hollywood and broadcast industries, DCI-P3 offers a significantly greater range of colors than Rec.709, and a wealth of existing equipment and content already supports it.
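The triangle idea can be sketched in a few lines of Python. This sketch assumes the published CIE 1931 xy chromaticities of the Rec.709 and DCI-P3 primaries; the function names are illustrative. A color is in gamut if it falls inside, or on an edge of, the triangle. Comparing triangle areas gives a rough sense of how much larger P3 is. Note that xy area is not perceptually uniform, so treat the ratio as indicative only.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); its sign says which side of edge o->a point b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut(xy, primaries) -> bool:
    """True if chromaticity xy lies inside (or on an edge of) the primaries' triangle."""
    r, g, b = primaries
    d1, d2, d3 = _cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    # Inside means the point is on the same side of all three edges.
    return not (has_neg and has_pos)


def triangle_area(primaries) -> float:
    """Area of the gamut triangle in the xy diagram (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


p3_red = DCI_P3[0]
print(in_gamut(p3_red, REC709), in_gamut(p3_red, DCI_P3))  # False True
print(round(triangle_area(DCI_P3) / triangle_area(REC709), 2))  # 1.36
```

P3’s own red primary falls outside the Rec.709 triangle. The D65 white point (0.3127, 0.3290) falls inside both gamuts.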

Brightness And HDR

HDR monitors aim to reproduce extremely bright scenes faithfully by using a common electro-optical transfer function (EOTF). This is the curve that converts the incoming video signal into displayed light, and it brings images closer to what the human eye sees. In perceptual terms, the range of brightness an HDR display can show is significantly greater than that of an SDR display. The result is smooth, finely graded greyscale gradation and clearly distinct fine detail in both predominantly bright and mostly dark scenes.
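For HDR10, the EOTF in question is SMPTE ST 2084, often called PQ (Perceptual Quantizer). The minimal Python sketch below implements the curve and its inverse using the constants published in ST 2084. It maps a normalized signal in [0, 1] to absolute luminance up to 10,000 cd/m². The function names are illustrative. Real implementations also quantize the signal to 10-bit code values, which is omitted here.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK = 10000.0  # cd/m^2, the absolute ceiling of the PQ curve


def pq_eotf(signal: float) -> float:
    """Non-linear PQ signal in [0, 1] -> displayed luminance in cd/m^2."""
    p = signal ** (1 / M2)
    y = max(p - C1, 0.0) / (C2 - C3 * p)
    return PEAK * y ** (1 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Luminance in cd/m^2 (0..10000) -> non-linear PQ signal in [0, 1]."""
    y = (luminance / PEAK) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


print(pq_eotf(1.0))                      # 10000.0
print(round(pq_inverse_eotf(100.0), 2))  # 0.51
```

A luminance of 100 cd/m², roughly SDR reference white, lands near the middle of the PQ signal range. This leaves the upper half of the signal for brighter highlights.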

Q2: Should HDR come with 4K?

Resolution

Display resolutions are usually named by their number of horizontal lines (pixel rows), for example 720p, 1080p, 1440p, and 2160p (4K). The higher resolutions in common use correspond to the following pixel counts, including the number of pixels across each horizontal line:

• 1080p resolution (FHD or Full HD) is 1920 x 1080 = 2.1 million pixels

• 1440p resolution (QHD or Quad HD) is 2560 x 1440 = 3.7 million pixels

• 2160p resolution (4K, UHD, or Ultra HD) is 3840 x 2160 = 8.3 million pixels

Upgrading to these higher resolutions provides significantly improved picture quality and sharper clarity. It also makes larger panel sizes practical, since at a lower resolution they would look pixelated and blurry.
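The pixel counts listed above are simple multiplication. This short Python sketch reproduces them and shows that 2160p carries exactly four times the pixels of 1080p.

```python
RESOLUTIONS = {
    "1080p (FHD)": (1920, 1080),
    "1440p (QHD)": (2560, 1440),
    "2160p (UHD/4K)": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total pixels = pixels per line x number of lines."""
    return width * height


base = pixel_count(*RESOLUTIONS["1080p (FHD)"])
for name, (w, h) in RESOLUTIONS.items():
    n = pixel_count(w, h)
    print(f"{name}: {w} x {h} = {n:,} pixels ({n / base:.2f}x 1080p)")
# 1080p (FHD): 1920 x 1080 = 2,073,600 pixels (1.00x 1080p)
# 1440p (QHD): 2560 x 1440 = 3,686,400 pixels (1.78x 1080p)
# 2160p (UHD/4K): 3840 x 2160 = 8,294,400 pixels (4.00x 1080p)
```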

HDR

Apart from resolution, HDR enhances the monitor’s contrast ratio: the ratio between the luminance of the brightest white and the darkest black the display can show. A larger contrast ratio allows significantly more detail to be revealed between the bright and dark extremes. By increasing contrast, HDR displays deliver brighter whites and deeper blacks. Combined with a wider color gamut, they also deliver more saturated and vibrant colors, so HDR images appear more realistic and vivid.
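Because contrast is a ratio rather than a difference, it can be computed directly from two luminance measurements. The Python sketch below compares an assumed SDR panel with an assumed HDR display. The peak-white and black-level figures are hypothetical, not measurements of any real product.

```python
def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Static contrast ratio: peak white luminance divided by black-level luminance."""
    if black_nits <= 0:
        raise ValueError("black level must be > 0 (a perfect zero black gives an infinite ratio)")
    return white_nits / black_nits


# Hypothetical (peak white, black level) in cd/m^2, for illustration only.
displays = {
    "typical SDR LCD (assumed)": (300.0, 0.3),
    "HDR display (assumed)": (1000.0, 0.05),
}
for name, (white, black) in displays.items():
    print(f"{name}: {contrast_ratio(white, black):,.0f}:1")
# typical SDR LCD (assumed): 1,000:1
# HDR display (assumed): 20,000:1
```

The black level sits in the denominator, so halving it doubles the contrast ratio just as doubling peak white does. This is why deeper blacks matter as much as brighter highlights.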

Because it improves brightness, contrast, color, and detail rather than pixel count, HDR is independent of the monitor’s resolution. Display panels with HD, FHD, QHD, and UHD resolutions can all support HDR, but only if the panel meets HDR performance standards.

Q3: What is DisplayHDR Certification?

VESA DisplayHDR certification is the world’s first public qualification testing protocol for HDR monitor performance. It assures consumers that a certified display meets defined minimum requirements for peak brightness, contrast, black level and dark detail, and color, for naturally realistic video and gaming. Please refer to the following page to learn more about DisplayHDR: https://displayhdr.org/performance-criteria/

Q4: What is HDR10?

HDR10 is an HDR video standard that most major international monitor brands have adopted and implemented. It was defined jointly by the Blu-ray Disc Association, the HDMI Forum and the UHD Alliance, and it is the format that supports compressed transmission of HDR video content. The CEA (Consumer Electronics Association) officially defined HDR10 as the format for HDR content on August 27, 2015. A key requirement for an HDR monitor is the ability to decode content in HDR10 format.

To conform to HDR10, content must meet the following criteria:

- EOTF (electro-optical transfer function): SMPTE ST 2084

- Chroma subsampling: 4:2:2/4:2:0 (for compressed video sources)

- Bit depth: 10 bit

- Color primaries: ITU-R BT.2020

- Metadata: SMPTE ST 2086, MaxFALL, MaxCLL

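Note: SMPTE ST 2086 “Mastering Display Color Volume” static metadata carries the calibration data of the mastering display: its color primaries, white point, and minimum and maximum luminance. MaxFALL (Maximum Frame Average Light Level) and MaxCLL (Maximum Content Light Level) are separate static values that describe the content itself. In compressed streams, this metadata is encoded as SEI messages within the video stream.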
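MaxCLL and MaxFALL are simple statistics over the decoded content. The sketch below follows the usual definitions:

- MaxCLL is the brightest component value of any single pixel in the content.
- MaxFALL is the highest per-frame average of each pixel’s brightest component.

It assumes frames are already available as lists of linear-light (R, G, B) values in cd/m². The function names and data are hypothetical. Production tools work on full-resolution video and may differ in details.

```python
from typing import Sequence

Pixel = tuple[float, float, float]  # linear-light R, G, B in cd/m^2


def max_cll(frames: Sequence[Sequence[Pixel]]) -> float:
    """Brightest single pixel anywhere in the content (max of its R, G, B)."""
    return max(max(px) for frame in frames for px in frame)


def max_fall(frames: Sequence[Sequence[Pixel]]) -> float:
    """Highest frame-average light level, taking each pixel as max(R, G, B)."""
    return max(sum(max(px) for px in frame) / len(frame) for frame in frames)


# Two made-up frames of two pixels each.
frames = [
    [(100, 80, 60), (10, 10, 10)],    # frame average = (100 + 10) / 2 = 55
    [(400, 900, 300), (50, 50, 50)],  # frame average = (900 + 50) / 2 = 475
]
print(max_cll(frames), max_fall(frames))  # 900 475.0
```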

Q5: What is the difference between DisplayHDR and HDR10?

DisplayHDR version 1.0 focuses on liquid crystal displays (LCDs). It establishes three distinct levels of HDR system performance to facilitate adoption of HDR throughout the PC market: DisplayHDR 400, DisplayHDR 600, and DisplayHDR 1000. HDR10, by contrast, is not a display certification but a video format. Most international monitor brands have adopted it, and it supports compressed transmission of HDR video content. Monitors at every DisplayHDR level are required to support the industry-standard HDR10 format in order to display HDR content properly.
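Each DisplayHDR 1.0 tier name reflects its minimum peak luminance in cd/m², so the naming can be illustrated with a tiny Python sketch. The helper below is hypothetical and looks at peak luminance alone. Actual certification also tests black level, color gamut, bit depth and more, so this is not a substitute for the criteria on the DisplayHDR page linked in Q3.

```python
from typing import Optional

# DisplayHDR 1.0 tiers; the number in each name is its minimum peak luminance (cd/m^2).
TIERS = (1000, 600, 400)


def highest_tier_by_peak(peak_nits: float) -> Optional[str]:
    """Highest tier whose peak-luminance floor is reached.

    Peak luminance is only ONE of the certification criteria; this is not a certification check.
    """
    for tier in TIERS:  # checked from highest to lowest
        if peak_nits >= tier:
            return f"DisplayHDR {tier}"
    return None


print(highest_tier_by_peak(650))  # DisplayHDR 600
print(highest_tier_by_peak(350))  # None
```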